NPS, CSAT/CDSAT, and CES

Background. In 2010, a powerful new tool for evaluating customer experience emerged: the CES (Customer Effort Score). It was developed by consultants at CEB. The idea was to estimate the likelihood of repeat purchases from the customer’s answer to a single question. The beauty of the concept is that there really is only one question (it is well known that the more questions a survey contains, the fewer customers reach the end). CES is therefore not just an indicator: by its very nature, it lets a company survey the largest possible number of customers.

From CEB’s perspective, neither the NPS index, nor the CSAT/CDSAT pair, nor any modification of CSI was fit to serve as a predictor of loyalty (i.e., readiness to repurchase or to recommend a company’s products). By that time, in July 2010, the article “Stop Trying to Delight Your Customers” had already been published in the Harvard Business Review, arguing that the correlation between satisfaction and loyalty is low.

Incidentally, on reflection this is logical, because loyalty comes in different types. For example, if you need to fly to Norilsk and only one airline flies there, you will have to buy the ticket even if it costs as much as a ticket to New York. It can’t be helped: this is forced loyalty to a monopolist.

According to the figures in the article, 20% of satisfied customers plan to stop buying, while 28% of dissatisfied ones would continue to buy. This supports the hypothesis that satisfaction indicators (CSAT/CDSAT and CSI) do not give marketing departments reliable conclusions about future customer behavior.

NPS turned out to be a slightly better loyalty indicator, but its internal defects meant it was not much help either. Why is NPS defective? Serious critiques of this indicator are available on the web, but to stay focused on the aim of this article, I’ll mention only the two main flaws:

- Identical NPS values can hide different distributions of detractors, neutrals, and promoters. That is, two brands with different levels of audience loyalty may end up with the same score.

- The NPS score depends heavily on how much time has passed since the customer’s contact with the company, in other words, on the measurement methodology.
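The first flaw is easy to see with numbers. Below is a minimal sketch, assuming the standard definition of NPS as the percentage of promoters minus the percentage of detractors; the function name and the two brand distributions are made up for illustration.

```python
def nps(promoters: int, neutrals: int, detractors: int) -> float:
    """Net Promoter Score: % of promoters minus % of detractors."""
    total = promoters + neutrals + detractors
    if total == 0:
        raise ValueError("no responses")
    return 100 * (promoters - detractors) / total


# Hypothetical brand A: a mostly indifferent audience with few detractors.
brand_a = nps(promoters=30, neutrals=60, detractors=10)

# Hypothetical brand B: a polarized audience with no neutrals at all.
brand_b = nps(promoters=60, neutrals=0, detractors=40)

print(brand_a, brand_b)  # 20.0 20.0
```

Both brands score 20, although brand B has four times as many detractors as brand A.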

What did the experts at CEB come up with? The question for measuring CES was originally formulated as: “How much effort did you personally have to put forth to handle your request?” It offered five response options, where 5 meant “a lot of effort” and 1 meant “very little”.

The idea was good and the reasoning sound, but problems surfaced. The first was translation. The original English wording relied on “how much” and “request”, which are semantically correct and fit the task, but a literal translation into some languages made the question hard to understand.

Secondly, it turned out that a scale from 1 to 5 is perceived differently in different countries. Some people are used to 1 meaning “excellent” (like first place on a podium), while elsewhere 1 is understood as the worst grade, below even “unsatisfactory”.

Along the way, another troubling detail surfaced. If the question was phrased with a verbal noun instead of a verb, for example “for the resolution of your problem” instead of “to solve your problem”, it could produce false positive responses even when the problem had not actually been solved.

CES 2.0

The team at CEB reworked the question and arrived at CES (Customer Effort Score) 2.0, designed to be free of the earlier shortcomings. The new question was: “Do you agree that the company has made it easy for you to solve your problem?” There are now seven possible responses instead of five, and a higher number means stronger agreement. By the way, a seven-point scale is arguably better than a five-point or ten-point one: a five-point scale leaves no room for a “five minus”, and on a ten-point scale it is unclear how an “eight” differs from a “nine”.
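The article does not say how individual answers are rolled up into a single number. Below is a minimal sketch, assuming answers are integers from 1 to 7 and that the result is reported both as a mean and as the share of answers at 5 or above. The aggregation rule, the cut-off of 5 for "agree", and the name `ces2_summary` are all assumptions made for illustration.

```python
from statistics import mean


def ces2_summary(responses: list[int]) -> dict[str, float]:
    """Summarize seven-point CES 2.0 answers (assumed aggregation, not an official one)."""
    if not responses:
        raise ValueError("no responses")
    if any(not 1 <= r <= 7 for r in responses):
        raise ValueError("every response must be between 1 and 7")
    # Assumption: an answer of 5 or higher counts as agreement.
    agree = sum(1 for r in responses if r >= 5)
    return {
        "mean": float(mean(responses)),
        "agree_share": agree / len(responses),
    }


summary = ces2_summary([7, 6, 5, 4, 2, 6, 7, 3])
print(summary)  # {'mean': 5.0, 'agree_share': 0.625}
```

Reporting the share alongside the mean makes it harder for a few extreme answers to hide the overall picture.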

Interestingly, neither CES 2.0 nor its predecessor has found widespread use in customer service and customer experience management. Perhaps the indicator was not promoted enough, or it proved too complex for company management to understand. It is also possible that the very phrasing of the CES question triggers rejection, for example from a customer experience director: “What do you mean, the company doesn’t make it easy to solve a problem?” To such a director, the question implies that they are either not doing their job or doing it poorly.

Important fact. The change in customer loyalty turns out to be nonlinear. Below a score of 5, loyalty can grow by as much as 20%; once a rating of 5 is reached, each additional point up to 7 adds only 2%. In other words, 5 is a threshold value, beyond which any further investment in solving customer problems needs an economic justification. It may well be that raising CES 2.0 from, say, 5 to 6 consumes so many resources that it is unprofitable.
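The economic check described above can be sketched as a simple break-even comparison. The 2% gain per point above 5 comes from the text. Everything else is an assumption for illustration: that the loyalty gain translates proportionally into retained revenue, the function names, and the customer, revenue, and investment figures.

```python
# Loyalty gain per extra CES 2.0 point above the threshold of 5, in percent (figure from the text).
GAIN_PERCENT_PER_POINT_ABOVE_5 = 2


def extra_revenue(customers: int, revenue_per_customer: float, points_gained: int) -> float:
    """Assumed model: retained revenue grows in proportion to the loyalty gain."""
    return customers * revenue_per_customer * GAIN_PERCENT_PER_POINT_ABOVE_5 * points_gained / 100


def is_justified(customers: int, revenue_per_customer: float,
                 points_gained: int, investment: float) -> bool:
    """True if the expected extra revenue exceeds the cost of raising the score."""
    return extra_revenue(customers, revenue_per_customer, points_gained) > investment


# Hypothetical case: 10,000 customers spending 200 each, raising CES 2.0 from 5 to 6.
gain = extra_revenue(customers=10_000, revenue_per_customer=200, points_gained=1)
print(gain)                                      # 40000.0
print(is_justified(10_000, 200, 1, 60_000))      # False: the improvement costs more than it returns
print(is_justified(10_000, 200, 1, 25_000))      # True
```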

Prospects. From the author’s point of view, measuring CES 2.0 should not replace the parallel measurement of NPS and satisfaction indicators. If, under specific circumstances, correlations between the indicators appear or, conversely, disappear, that is a good reason to put questions to the analytics department.
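One way to watch for such changes is to compute the correlation between two indicators over successive periods and compare the results. Below is a minimal sketch using the Pearson coefficient. The weekly values are invented, and the choice of Pearson correlation is an assumption rather than something the article prescribes.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equally long series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Hypothetical weekly averages for two consecutive quarters.
ces_q1, nps_q1 = [5.1, 5.4, 5.0, 5.6, 5.8], [22, 27, 20, 30, 33]
ces_q2, nps_q2 = [5.2, 5.5, 5.1, 5.7, 5.9], [31, 24, 29, 22, 27]

print(round(pearson(ces_q1, nps_q1), 2))
print(round(pearson(ces_q2, nps_q2), 2))
```

A strong correlation in one period followed by a weak or negative one in the next is the kind of change worth raising with analysts.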

CES 3.0

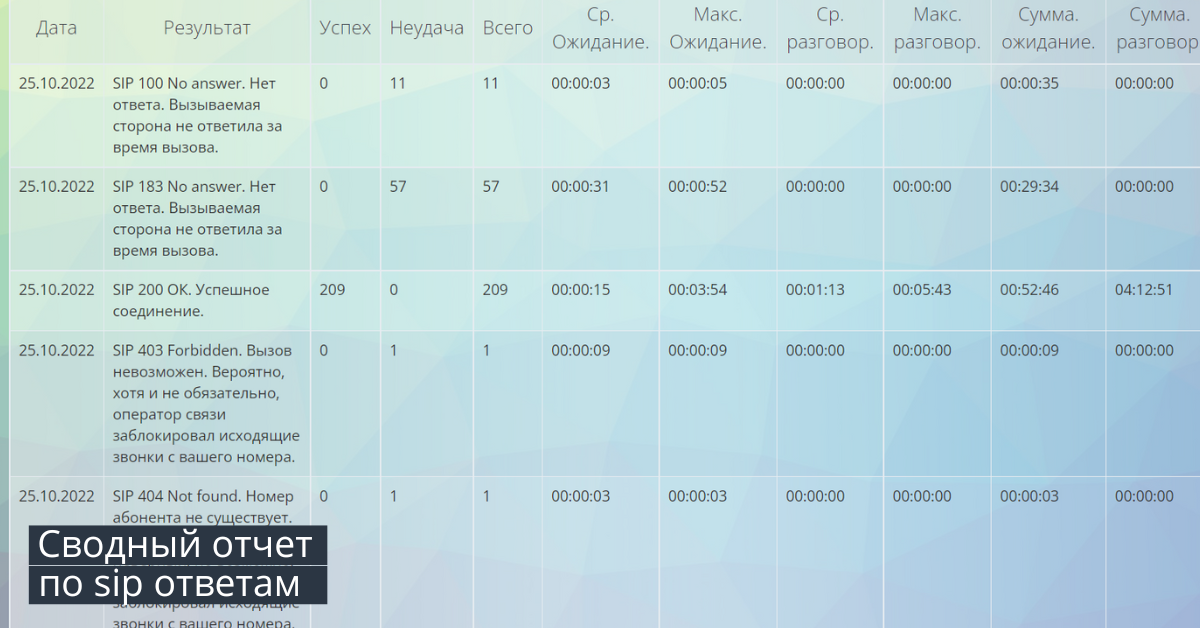

In practice, the CES (Customer Effort Score) 2.0 value alone is not informative enough to manage by. For example, suppose the value drops (incidentally, the indicator can be measured with IVR or with bots in chats and messengers), and we have 20 agent groups, two support lines, and a product line of 1,000 products. We see the drop and have to dig hard to find the cause, which, by the way, may not be a single cause but several overlapping ones. Therefore, a multidimensional CES 3.0 is needed, measured simultaneously across:

- Products or product groups

- Customer query content

- Agent groups

- Supervisors of agent groups (even the same people might perform differently under different supervisors)

- Ongoing marketing activities

Such multidimensionality will allow the new CES to identify problems quickly. However, it will require a new type of “smart” signaling. Instead of BI panels and a multitude of indicators on dashboards, the automation should be able to provide preliminary conclusions in text form: “During the promotion for product G on 07.01.21, customers experienced the greatest difficulties with question Q in agent group No. 3 under supervisor S”.
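The idea of a text pre-conclusion can be illustrated with a small sketch. It assumes each survey answer is stored together with the five dimensions listed above, groups answers by the full combination of dimensions, and reports the combination with the lowest mean score. The record layout, the field names, the `min_count` cut-off, and the sample data are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

DIMENSIONS = ("product", "query", "agent_group", "supervisor", "campaign")


@dataclass(frozen=True)
class Response:
    product: str
    query: str
    agent_group: str
    supervisor: str
    campaign: str
    score: int  # CES answer on the seven-point scale


def worst_segment(responses, min_count=2):
    """Return (segment, mean score, count) for the lowest-scoring combination, or None."""
    buckets = defaultdict(list)
    for r in responses:
        key = tuple(getattr(r, d) for d in DIMENSIONS)
        buckets[key].append(r.score)
    # Ignore combinations with too few answers to say anything about them.
    eligible = {k: mean(v) for k, v in buckets.items() if len(v) >= min_count}
    if not eligible:
        return None
    key = min(eligible, key=eligible.get)
    return dict(zip(DIMENSIONS, key)), eligible[key], len(buckets[key])


def pre_conclusion(responses) -> str:
    """Turn the worst segment into a one-sentence text signal."""
    found = worst_segment(responses)
    if found is None:
        return "Not enough data for a conclusion."
    seg, avg, n = found
    return (
        f"During campaign {seg['campaign']}, customers had the greatest difficulty "
        f"with query {seg['query']} about product {seg['product']} "
        f"in agent group {seg['agent_group']} under supervisor {seg['supervisor']} "
        f"(mean CES {avg:.1f}, n={n})."
    )


sample = [
    Response("G", "Q", "3", "S", "promo-G", 2),
    Response("G", "Q", "3", "S", "promo-G", 3),
    Response("G", "Q", "1", "T", "promo-G", 6),
    Response("G", "Q", "1", "T", "promo-G", 7),
    Response("H", "R", "3", "S", "none", 5),
]
message = pre_conclusion(sample)
print(message)
```

Grouping by the full combination becomes sparse on real data, so a production version would probably also need to look at each dimension separately and at pairs of dimensions.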

At Oki-Toki, you can create an automatic customer survey script using any of these techniques, and the results can be saved in our internal CRM or pulled into AMOCRM, Bitrix24, or your own CRM via integrations. In addition to these metrics, you can use automatic speech analytics and conversation evaluation forms, both also available in Oki-Toki, to identify and correct agent errors.